The OpenTPU project has emerged as a fascinating attempt to reverse-engineer Google's specialized AI chip, but online commentators are quick to point out its complexities and potential misunderstandings.

The core of the debate centers on the differences between TPU generations and product lines. One commentator highlighted a critical distinction between the Edge TPU used in smaller devices and the datacenter TPU designed for large-scale neural network computations. This is not a minor technical detail but a fundamental architectural difference, and which of the two a reimplementation targets shapes its entire approach.

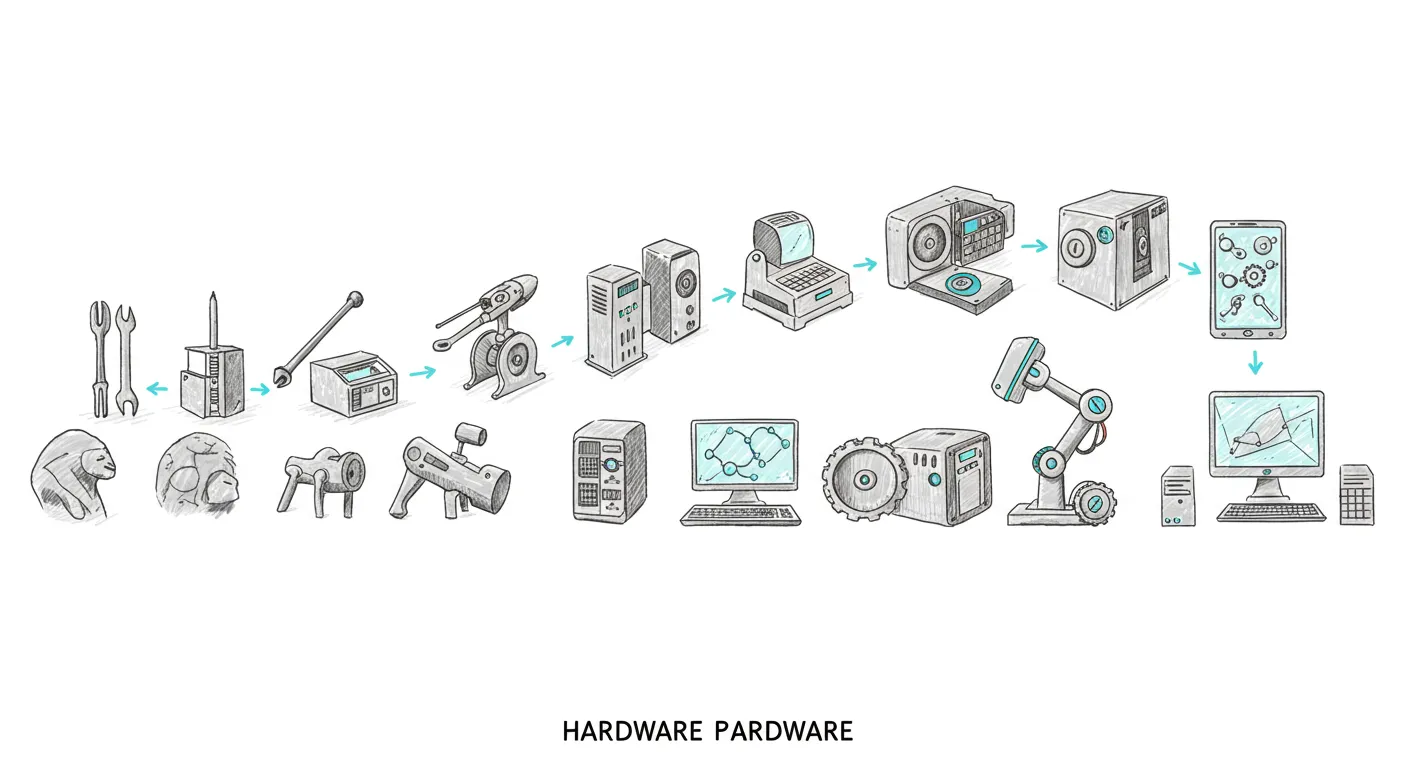

Interestingly, the project seems to draw on older TPU designs, particularly those from around 2015-2017. The original TPU was revolutionary in its simplicity: a custom ASIC (Application-Specific Integrated Circuit) focused on neural network inference, built around a matrix multiply unit containing 65,536 8-bit multiply-accumulate (MAC) units with a peak throughput of 92 TeraOps per second.
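The 92 TeraOps figure can be sanity-checked with a little arithmetic. The sketch below assumes two details that this article does not state and that come from Google's published description of the first-generation TPU: the matrix unit is a 256 x 256 array, and it runs at 700 MHz. It also assumes the usual convention of counting each multiply-accumulate as two operations. The function and constant names are illustrative only.

```python
# Back-of-the-envelope check of the first-generation TPU's peak throughput.
# Assumptions (not stated in this article): a 256 x 256 MAC array, a 700 MHz
# clock, and one multiply-accumulate counted as two operations.

ARRAY_DIM = 256      # assumed side length of the square MAC array
CLOCK_HZ = 700e6     # assumed clock frequency in Hz
OPS_PER_MAC = 2      # one multiply plus one add per MAC per cycle


def peak_ops_per_second(array_dim=ARRAY_DIM, clock_hz=CLOCK_HZ,
                        ops_per_mac=OPS_PER_MAC):
    """Return peak operations per second for a square MAC array."""
    macs = array_dim * array_dim
    return macs * ops_per_mac * clock_hz


mac_count = ARRAY_DIM * ARRAY_DIM
peak_tera_ops = peak_ops_per_second() / 1e12

print(f"MAC units: {mac_count}")
print(f"Peak throughput: {peak_tera_ops:.2f} TeraOps/s")
```

Under these assumptions the array holds 65,536 MAC units and the peak works out to roughly 91.75 TeraOps per second, which rounds to the quoted 92. This is a theoretical ceiling; sustained throughput on real workloads depends on how well the array is kept fed with data.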

The open-source community's interest is palpable, with discussions spanning multiple platforms and referencing Google's original technical papers. However, there's a healthy skepticism about the project's current state and its ability to accurately capture the TPU's complex design.

Perhaps most telling is the ongoing dialogue about the TPU's evolution. What started as a relatively simple inference accelerator has become a sophisticated piece of AI infrastructure, making any open-source reimplementation a challenging but potentially groundbreaking endeavor.